# 2023

## December

### 12th December 2023

CoreMelt has released CoreMelt Manager v1.1.4.

You can learn more here.

MotionVFX has released mFilmLook v2.0.6 with the following fixes:

- Improved stability and robustness when using multiple instances of the plugin.

You can learn more here.

Scott Simmons has updated his ProVideo Coalition article:

It's worth a read to hear more details about how to speed up exports with simultaneous processing in Final Cut Pro for Mac.

You can also read about it in the Final Cut Pro manual here.

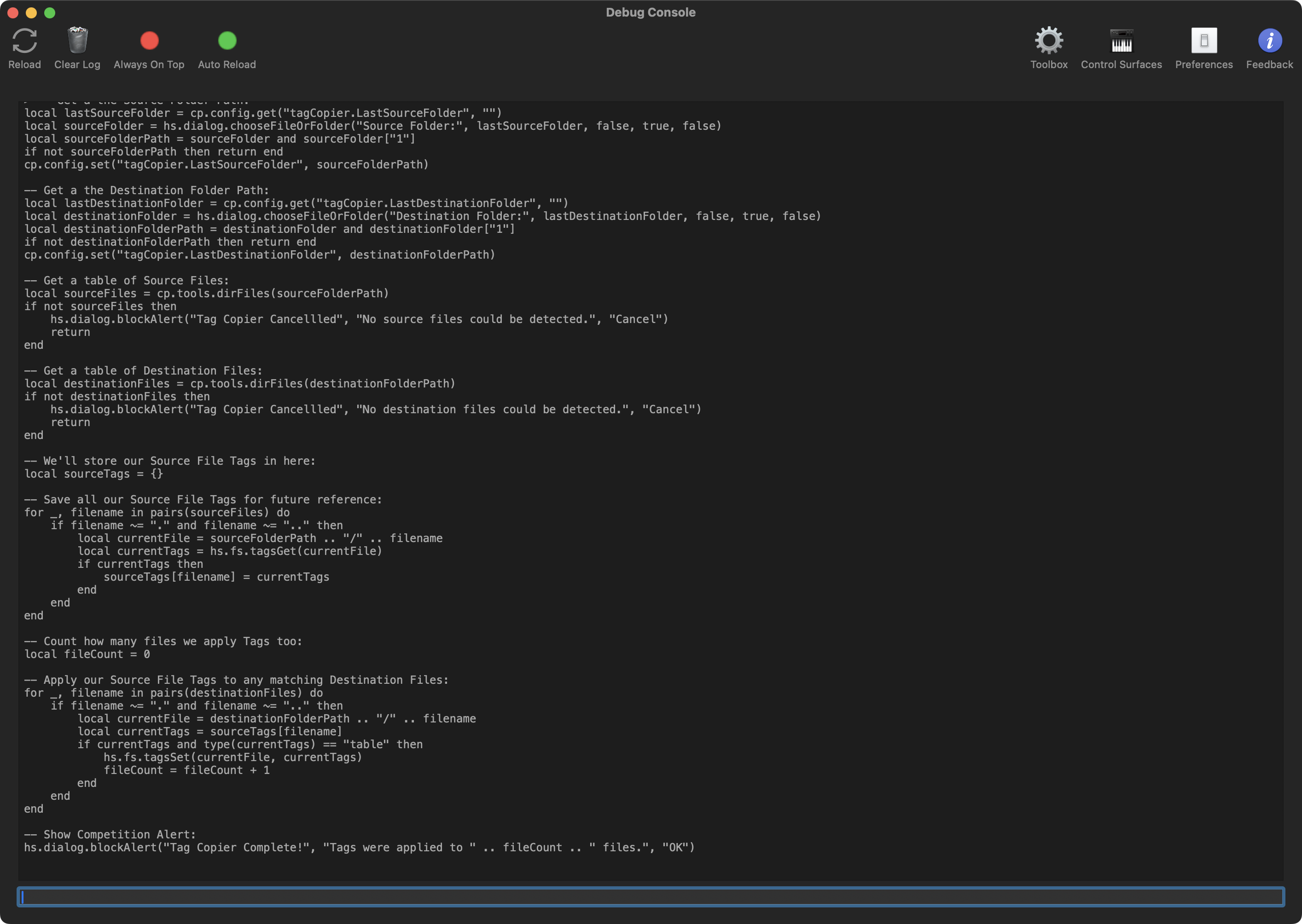

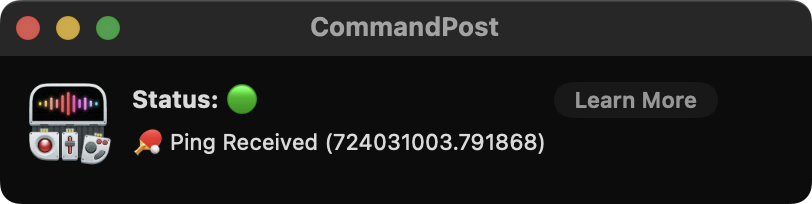

One of the things I really LOVE about CommandPost is how quick and easy it is to build automation tools.

For example, last night Nguyễn Quốc Hoàng contacted me asking the following:

Hi Chris, wanna ask if you know any app that can transfer / copy tags-metadata from original clips to trimmed clip (based on filename).

My problem is, after the project is finish and I trimmed all the project video files into a smaller segments to archive.

My metadata, finder tags are lost.

This is the perfect problem for something like CommandPost to solve, via its powerful Lua scripting.

Here's the solution I quickly came up with (which was posted here on the CommandPost GitHub Discussions):

```lua
-- Get the Source Folder Path:
local lastSourceFolder = cp.config.get("tagCopier.LastSourceFolder", "")
local sourceFolder = hs.dialog.chooseFileOrFolder("Source Folder:", lastSourceFolder, false, true, false)
local sourceFolderPath = sourceFolder and sourceFolder["1"]
if not sourceFolderPath then return end
cp.config.set("tagCopier.LastSourceFolder", sourceFolderPath)

-- Get the Destination Folder Path:
local lastDestinationFolder = cp.config.get("tagCopier.LastDestinationFolder", "")
local destinationFolder = hs.dialog.chooseFileOrFolder("Destination Folder:", lastDestinationFolder, false, true, false)
local destinationFolderPath = destinationFolder and destinationFolder["1"]
if not destinationFolderPath then return end
cp.config.set("tagCopier.LastDestinationFolder", destinationFolderPath)

-- Get a table of Source Files:
local sourceFiles = cp.tools.dirFiles(sourceFolderPath)
if not sourceFiles then
    hs.dialog.blockAlert("Tag Copier Cancelled", "No source files could be detected.", "Cancel")
    return
end

-- Get a table of Destination Files:
local destinationFiles = cp.tools.dirFiles(destinationFolderPath)
if not destinationFiles then
    hs.dialog.blockAlert("Tag Copier Cancelled", "No destination files could be detected.", "Cancel")
    return
end

-- We'll store our Source File Tags in here:
local sourceTags = {}

-- Save all our Source File Tags for future reference:
for _, filename in pairs(sourceFiles) do
    if filename ~= "." and filename ~= ".." then
        local currentFile = sourceFolderPath .. "/" .. filename
        local currentTags = hs.fs.tagsGet(currentFile)
        if currentTags then
            sourceTags[filename] = currentTags
        end
    end
end

-- Count how many files we apply Tags to:
local fileCount = 0

-- Apply our Source File Tags to any matching Destination Files:
for _, filename in pairs(destinationFiles) do
    if filename ~= "." and filename ~= ".." then
        local currentFile = destinationFolderPath .. "/" .. filename
        local currentTags = sourceTags[filename]
        if currentTags and type(currentTags) == "table" then
            hs.fs.tagsSet(currentFile, currentTags)
            fileCount = fileCount + 1
        end
    end
end

-- Show Completion Alert:
hs.dialog.blockAlert("Tag Copier Complete!", "Tags were applied to " .. fileCount .. " files.", "OK")
```

You can literally just paste this into the CommandPost Debug Console, and away you go!

You can learn more about CommandPost's Lua Scripting here.

Alex Raccuglia is the genius behind Ulti.Media, based in Italy. He's a commercial director and software developer.

If you haven't already, you can read his INCREDIBLE Developers Case Study about Transcriber on FCP Cafe here.

Whilst you're there, you should also read Demystifying Final Cut Pro XMLs by Philip Hodgetts & Dr Gregory Clarke from Intelligent Assistance.

Alex makes some incredible tools such as:

- Transcriber

- BeatMark Pro

- FCP Diet 2

- Ulti.Media Converter 2

- FCP Video Tag

- FCP Cut Finder

- FCP SRT Importer 2

- FCPX AutoDuck

- Subtitles Extractor

- Smart Video Splitter

- Cleanterview

- ...and many more audio, After Effects, podcasting and utilities!

If you haven't already subscribed, Alex has been doing a super fun and entertaining YouTube series called The Morning Rant.

You can find all the previous episodes on his YouTube Channel here.

He recently posted this great rant:

The YouTube description says:

The main focus of the video is on my exploration of integrating automatic ducking into Final Cut Pro. I reflect on my past app, FCPX AutoDuck, and express the desire to enhance its functionality by making it work within Final Cut Pro. I detail the complexities of the task, involving parsing FCP XML and dealing with intricate timelines, nested timelines, and various editing techniques. I acknowledge the difficulty of achieving automatic ducking within Final Cut Pro, emphasizing the absence of this feature even in the latest versions.

I highlight my commitment to this project as a side venture while prioritizing the release of FCP Pause Cutter in 2024. Despite the challenges and doubts, I share my progress on Twitter, showcasing a potential icon for the app. I express skepticism about the feasibility of creating a seamless and efficient automatic ducking feature but remain dedicated to personal research in this area.

The video concludes with a mention of a pre-production meeting in Milan and my reluctance to take on low-budget, demanding projects. I express concerns about the future of Final Cut Pro, hinting at a possible decline.

Here's a full transcript of the video, created using Transcriber (so if there are any typos - Alex can only blame himself, haha):

Hi there, welcome to the morning rant.

I know that I treat this show as a podcast because you know this should be a YouTube show but I talked to this strange camera on my car as I will talk to a microphone to a simple microphone doing a podcast and that's a problem because this is not a podcast but I treat it as a podcast because I'm a podcaster since 2011 and so after something like more than a thousand episodes I recorded sometimes even longer than one hour so this is my comfort zone.

A podcast is my comfort zone.

Doing video is something like I do to force myself to look into a camera and try to do something not so ugly.

So today I want to talk about what happened yesterday and because it can be interesting for everybody who's trying to understand why something happens and something doesn't happen in the realm of a Final Cut Pro.

Okay so yesterday was a very strange day because the night before yesterday I couldn't sleep because my son had a little problem trying to sleep and so it was a blank night.

I don't know how to translate this into English but me and my wife didn't sleep almost anything and yesterday was a strange day because I couldn't go to the office.

I took one day off because you have to do a lot of things related to my son.

We had to do vaccination, having a meeting with the teachers of the kindergarten.

So doing a lot of stuff and I didn't have much time to focus on anything.

I spent something like one hour in the office with my wife in my wife's office trying to produce something but I couldn't focus on anything and so I started doing a little research and considered that I spent one hour but it was segmented in maybe three parts because twice we had to go to the bar and take some coffee.

In Italy, Espresso is something like a religion and we like coffee.

I like coffee and then we took a lot of coffee yesterday because we needed to stay awake.

I tried to do some research because I created many apps that communicate with Final Cut Pro in the sense of passing data to Final Cut Pro.

You know, you prepare something into my apps and then the apps prepare something and send the data to Final Cut Pro and then you can finish the work on Final Cut Pro.

So the workflow works on my app and then after computation, decision making and something like this, the final work can be done, should be done and must be done in Final Cut Pro.

There are a lot of apps that work inside of Final Cut Pro or using Final Cut Pro data and so they can take data from Final Cut Pro, computate them and then send them back to Final Cut.

Okay, there are a lot of stuff like this.

I think that BeatMark Pro can work like this.

Choose one music, you choose a lot of clips and then you can send all the stuff to BeatMark Pro to create a new timeline with the beats in sync with the music and then edit it in sync with the music and send the final result back to Final Cut Pro.

Now BeatMark works all in one direction.

You create all the documents, you load the music, you load the assets into BeatMark Pro and then BeatMark Pro creates this timeline and then it sends it to Final Cut Pro.

And I say to myself it would be nice to understand, to learn how to send data from Final Cut Pro to my app, to my apps and then send them back.

And so I started doing a little research.

Everything started because I needed to do an automatic ducking.

My first app was FCPX AutoDuck.

It does automatic ducking outside Final Cut Pro because you load the speaker track and then the audio, the music track and then it produces a new timeline with the audio, with the music track, with keyframes that lower the music and raise it up to make automatic ducking.

So when there's someone speaking or talking, the music goes down and then after the speech, after the talking, the music returns up in a very elegant and seamless way.

It's not an automatic ducking that works in one direction because it goes backwards.

It analyzes the clusters of the talking and then starts lowering the music before the talking.

It's something like it goes backward in time.

It can do this because it works on files and not in real time.

And I needed to do something like this and then I said to myself "Ok, this is an app that is out since 2017.

I don't know.

It's one of my first apps I ever developed.

The interface is old, maybe ugly.

It works.

It still sells today because it's useful.

But it will be super, super, super, super, super, super, super useful if it can work inside Final Cut Pro, take in a timeline with many dialogues, stuff and many musics and then do automatic ducking on the complete timeline.

And I said to myself "Ok, let's try to research this as a side-side project because I'm very focused to put out on the market FCP Pause Cutter that will be my super big app of the 2024 because it's a super big app.

It's super powerful.

But I said to myself "Ok, let's do this some kind of side-side project while I'm working in the office of my wife." Doing this kind of stuff is hard.

It's very, very hard.

I tried to understand if there's some library, some framework on GitHub to parse the FCP XML because Final Cut Pro communicates with other apps via an XML.

A subset of an XML or an extension of XML, that is FCP XML.

Something like a complete and total description of what happens in the timeline, in the event, in the library.

Almost everything that you can think about can be described in this XML.

It's a very huge and complex file.

It's something like an old kind of file because today everything is done by a JSON.

But once, a lot of time ago, before the introduction of JSON, we used XML.

It's beautiful that today we have JSON because XML is a pain in the I know what and you know what.

So I start trying to understand if there's someone who developed something like a parser for doing this.

And then I said to myself, "This thing is very complicated." Because think about a simple timeline, okay? You have a lot of dialogue.

You're doing something like an interview and then you have 10, 20, 50 little clips or segments of dialogue.

And then you have a background music.

So the music starts and then after 10 seconds of music, after the introduction, you have the first dialogue.

And then the music should go down and then should go up and that should go down to not interfere with the dialogue.

Then the dialogue finishes and then the music goes on for other 10 seconds.

The music goes up and then it fades out.

This is a very simple timeline.

To produce something like an automatic ducking, you have to do tons of stuff.

You have to recreate inside your app.

Inside my app I have to recreate the structure of the timeline with all the segments because you don't take a full clip without an in point or an out point.

You have to take dozens, hundreds of segments, shift them in time, understand if there's dialogue in this segment and then doing the same for the music or the musics, parsing what is dialogue and what is music but this can be done by other roles.

And then recreate a very complex structure of dialogues, segments of dialogues and music segments of music to produce this unique dialogue segment and a unique music segment, add keyframes and then send it back to Final Cut Pro.

It's not complex, it's almost impossible.

It's very, very hard and that's the reason nobody ever done an automatic ducking inside Final Cut Pro because it's hard.

And this is one of the easiest way of having a timeline.

What if you have a nested timeline, some compounds, secondary storylines, compounds with compounds of compounds, synchronized clip or if you do fade in and fade out of the music, crossfades.

There's a ton, a ton, a super ton of way of doing a single simple timeline, a common timeline, a normal timeline that you will do in your project.

And doing an automatic ducking for this is... it's a pain in the ass.

I don't know how to do this.

I'm trying to do this as a personal research, to do ducking with maybe one or two, let's keep it simple, one or two musics and a bunch of clips in the main timeline without compounds, without synchronized clips or without multicam.

Trying to understand if this is feasible as a personal research.

Yesterday I put on Twitter "okay I'm researching this kind of stuff with a version 2 of FCP AutoDuck" and I showed the ideal icon of the app, an icon that I developed for an episode of my podcast, TechnoPills.

It's in Italian, sorry for everybody who doesn't understand Italian.

And because I developed an autoducking feature for my app Ulti.Media Converter and it works, it's cool, it's powerful, it's... yeah! Using artificial intelligence Firefly by Adobe I created this icon with a duck with a lot of speakers and it's beautiful.

And so I said to myself if I'll ever do an automatic duck, a version 2 of autoducking, I will use this as an icon.

So I think this is a some kind of a joke because I'm quite sure that I will never do an app that can do this in a seamless way, in an efficient way, in some way, because it's too hard to produce an automatic ducking.

Think about it, even Apple didn't do an automatic ducking for Final Cut Pro because there's so much you have to understand, to learn, to figure out.

It's almost impossible.

But they should do this.

I expect this is an upcoming version of Final Cut Pro but I'm working with an automatic ducking feature since 10.4 and now we have 10.7.

A huge improvement with the scrolling timeline for you! And no automatic ducking.

I don't know, sometimes I think that FCP is a die-hard platform but I keep it to myself because I base all my business on Final Cut Pro.

Well, I think that's all for today.

Now I have to run in the rain in my car towards Milan because I have a pre-production meeting for a very very very super strong low-budget product production that I don't want to do.

And I said to myself, you know, never accept this kind of works anymore because working with so little margins and with clients is so demanding, with an arrogant way.

It's something that hurts my stomach and then I need a lot of Maalox to survive.

Ok, that's it, now I run to this meeting and cross your finger for me and I'll keep you updated with the things that I will discover in my research trying to understand how Final Cut Pro thinks using XML.

It's 2023 and everybody should not use XML anymore.

That's all for today and as always I say... Ciao! Oh, remember to comment this video, like this video, subscribe to the channel, blah blah blah, all the stuff that all the good YouTubers do.

I don't do this because I'm not a YouTuber, I'm a podcaster.

This is just a video podcast.

I know that it will be more, more, more interesting doing this as an audio file but you know what, I'm trying to experiment.

Ciao!

It's a great video, and it's inspired me to reply in more depth, here at FCP Cafe...

I absolutely love all of Alex's tools - I have personally bought them all, and use them all the time - especially Transcriber, BeatMark Pro and FCP SRT Importer 2.

However, one complaint I've always had is that Alex's tools don't use Workflow Extensions.

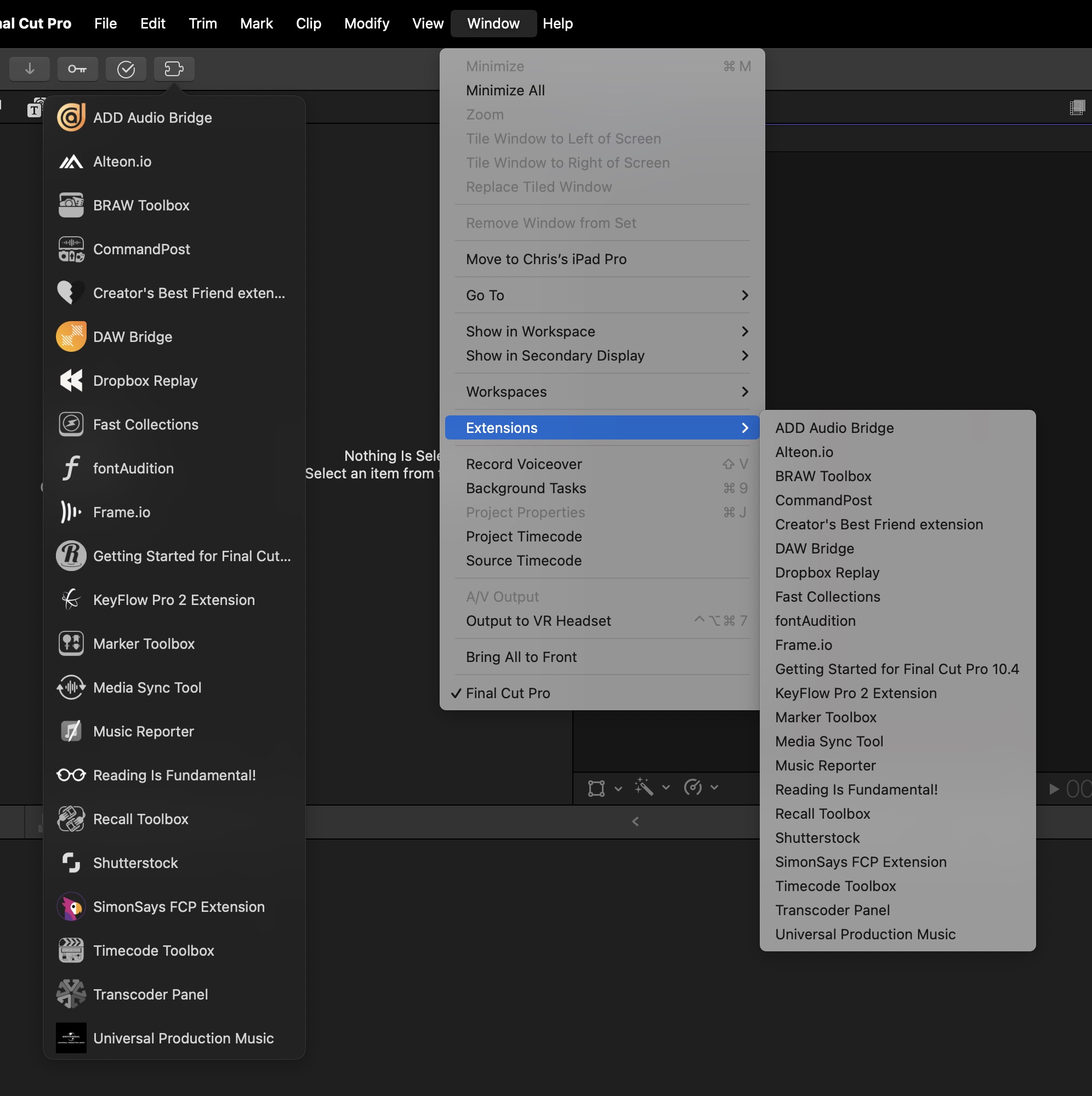

A Final Cut Pro Workflow Extension is a feature in Apple's Final Cut Pro that allows third-party developers to integrate their tools and services directly into the Final Cut Pro interface, enhancing the functionality and efficiency of the editing process.

They provide seamless integration as they appear as panels within the Final Cut Pro interface. This means editors can use third-party tools and services without leaving Final Cut Pro, maintaining a smooth and uninterrupted editing workflow. Their window position also gets saved in any saved Workspaces.

Workflow Extensions cover a diverse range of applications and vary widely in function, including tools for media asset management, stock footage libraries, music services, transcription services, and more. This variety allows editors to tailor their workflow according to their specific needs.

You can find Workflow Extensions on the Mac App Store, but they can also be released outside of the Mac App Store.

FCP Cafe keeps track of all the available Workflow Extensions here.

By having these additional tools integrated directly into the editing environment, editors can save time and effort. Tasks like searching for media, managing files, or transcribing audio can be done quickly and efficiently within Final Cut Pro.

I personally have a LOT of Workflow Extensions installed:

From a developer's perspective, a Workflow Extension is basically just a custom view that you can put whatever you like inside.

When you drag something from the Final Cut Pro Browser to any text box (e.g. TextEdit), what it's actually dragging is FCPXML data (try it yourself to see).

Because of this, you can easily drag objects from the Final Cut Pro Browser to your Workflow Extension, and vice versa.

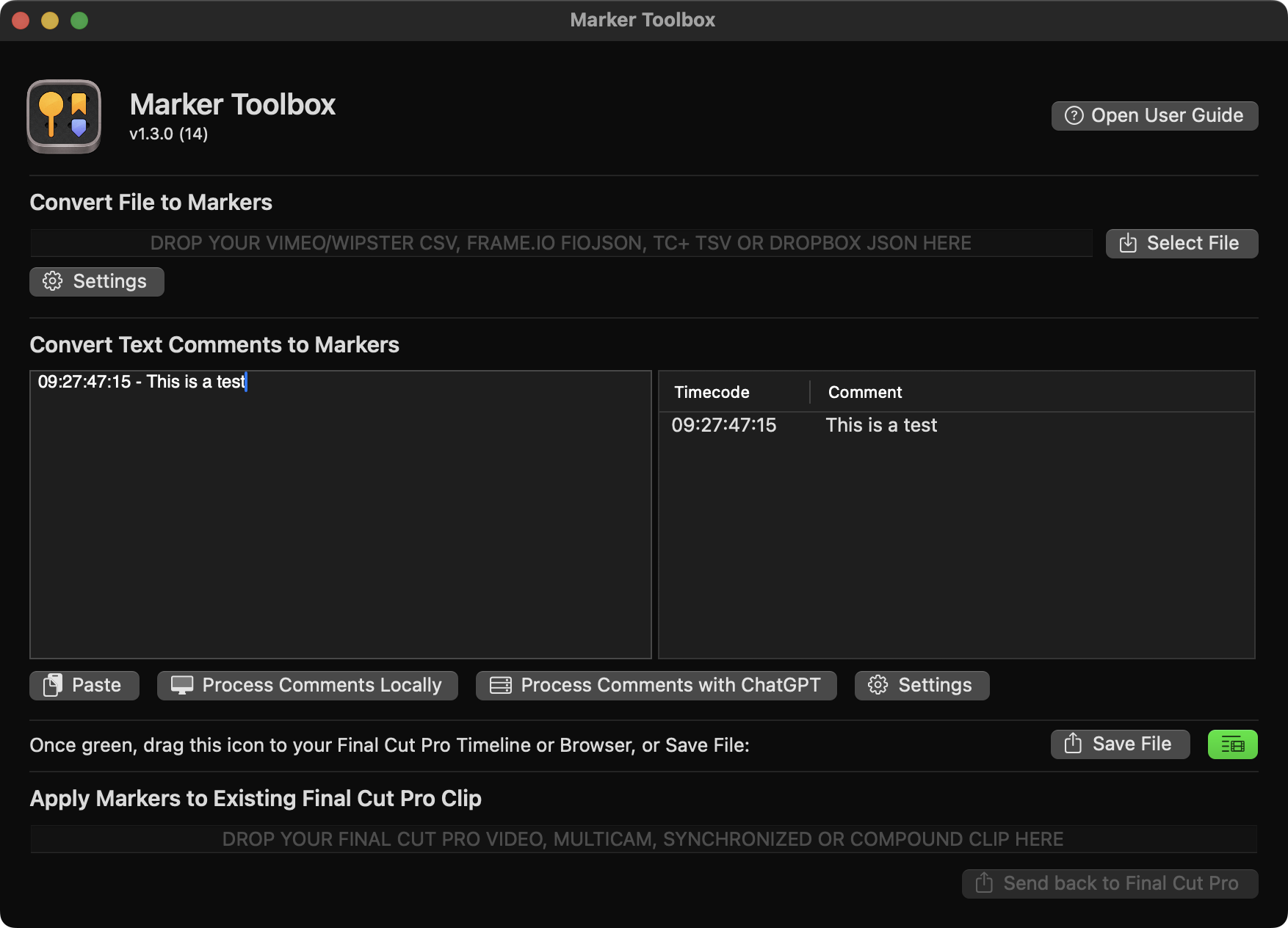

For example, in Marker Toolbox you can drag your markers from the Workflow Extension back into your Final Cut Pro timeline as a Compound Clip (drag the green icon):

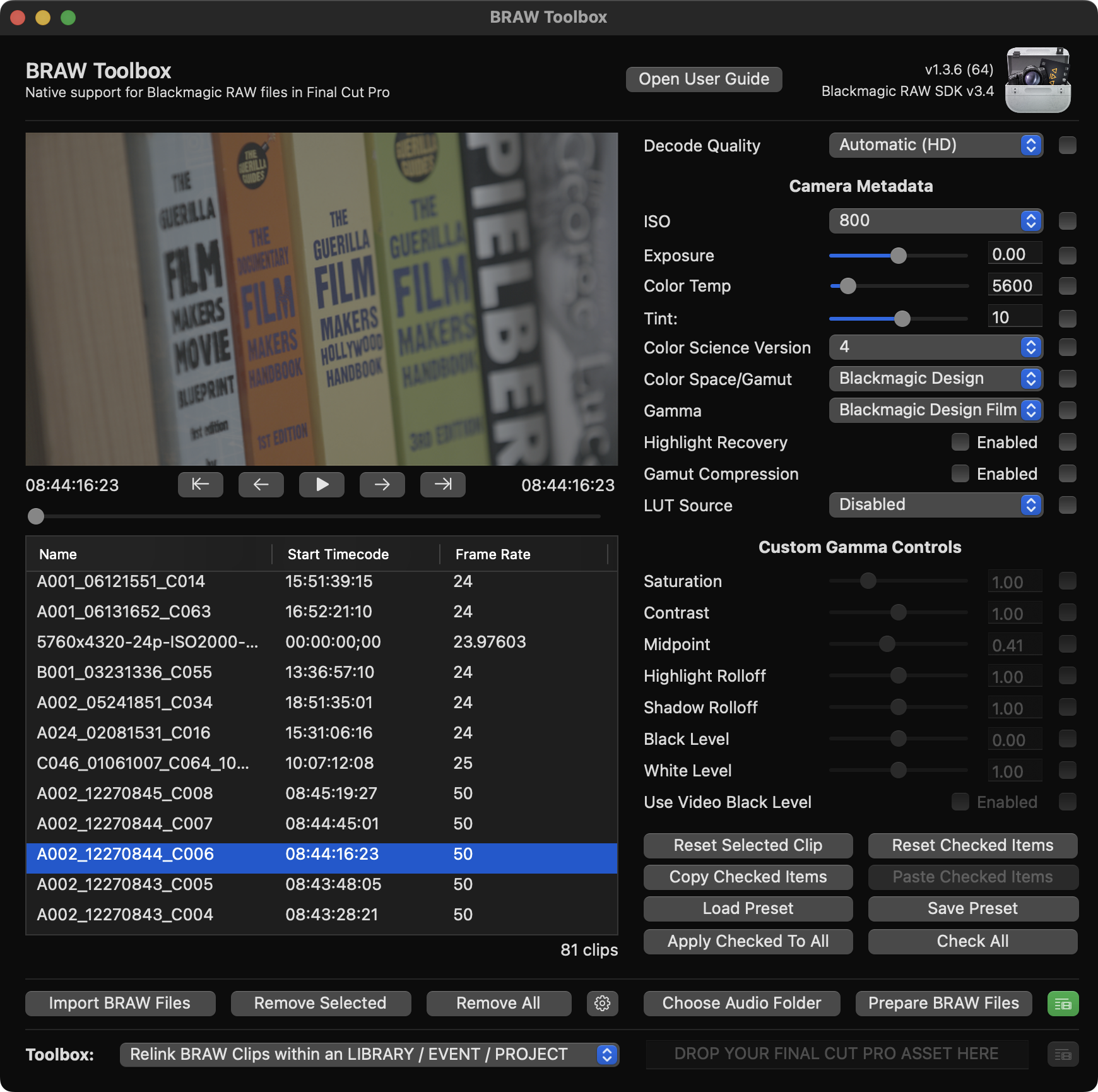

In BRAW Toolbox once you've imported all your BRAW footage, you can drag an Event from the Workflow Extension back into your Library:

You can also drag Libraries, Events and Projects back into the Workflow Extension via the Toolbox at the bottom of the user interface, for doing things like exporting a FCPXML for DaVinci Resolve.
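Because the dragged payload is just FCPXML text, the receiving side is mostly XML parsing. As a rough illustration (not code from any of the apps mentioned here), this Python sketch pulls marker names and positions out of a small hand-written FCPXML-style fragment. The sample document is heavily simplified, and `parse_time` and `list_markers` are hypothetical helper names; real FCPXML from Final Cut Pro contains far more structure.

```python
import xml.etree.ElementTree as ET
from fractions import Fraction

# Simplified, hand-written sample for illustration only (not real Final Cut Pro output).
SAMPLE_FCPXML = """<fcpxml version="1.11">
  <library>
    <event name="Example Event">
      <project name="Example Project">
        <sequence>
          <spine>
            <asset-clip name="Interview" offset="0s" start="0s" duration="10s">
              <marker start="5s" duration="100/2400s" value="First answer"/>
              <marker start="18000/2400s" duration="100/2400s" value="Second answer"/>
            </asset-clip>
          </spine>
        </sequence>
      </project>
    </event>
  </library>
</fcpxml>"""


def parse_time(value):
    """Convert a time string such as '18000/2400s' or '5s' into exact seconds."""
    return Fraction(value.rstrip("s"))


def list_markers(fcpxml_text):
    """Return (clip name, marker name, marker start in seconds) for every marker."""
    root = ET.fromstring(fcpxml_text)
    markers = []
    for clip in root.iter("asset-clip"):
        for marker in clip.findall("marker"):
            markers.append(
                (clip.get("name"), marker.get("value"), parse_time(marker.get("start")))
            )
    return markers


for clip_name, marker_name, start in list_markers(SAMPLE_FCPXML):
    print(f"{clip_name}: {marker_name} at {float(start)}s")
```

Keeping times as fractions rather than floats avoids rounding drift when values are later converted back into frame counts.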

You can also trigger a limited number of things via the Workflow Extension API.

For example:

We use this API to allow CommandPost to programmatically move the playhead via the CommandPost Workflow Extension:

This Workflow Extension is super simple - it's just written in Objective-C, and uses WebSockets to communicate back and forth between the Workflow Extension and CommandPost.

You can check out the source code on GitHub here.

Controlling the playhead via the API is a lot smoother and more reliable than pressing virtual shortcut keys, or triggering menubar items.

But aside from that - that's really all Workflow Extensions can do. They're just a dumb window that a developer can control essentially.

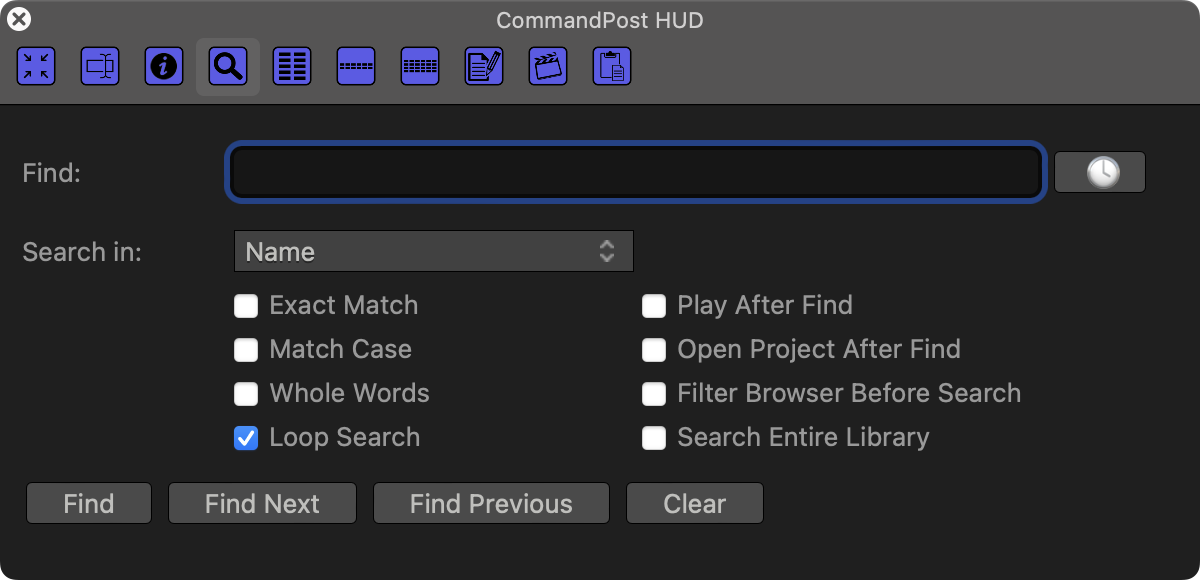

In fact, we decided NOT to use a Workflow Extension for the CommandPost HUD - because there was really no advantage:

However, jumping back to Alex's video - I'd LOVE for Transcriber and BeatMark Pro to be quickly accessible from directly within Final Cut Pro.

I'd love to be able to just drag in a music track from the Browser into a Workflow Extension, do the processing, then drag the clip back into my timeline with markers added.

This is all possible, and somewhat easy to do.

You can learn more about Workflow Extensions on FCP Cafe here.

But Workflow Extensions are only one technology that third party Final Cut Pro developers get access to...

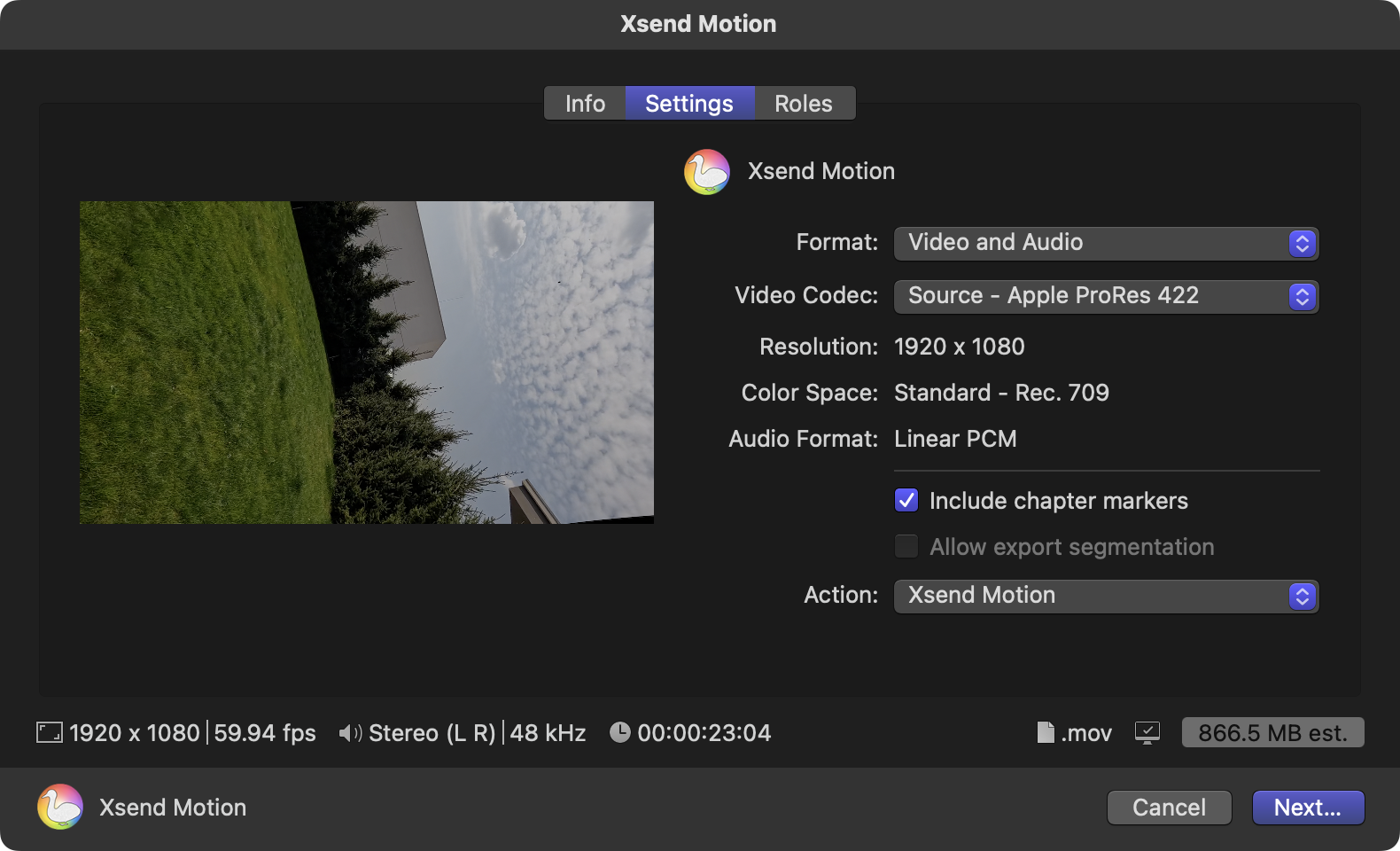

There's also Custom Share Destinations, which is something I've been digging into deeply recently, to help out Vigneswaran Rajkumar for his exciting upcoming app, Marker Data.

Sadly, Custom Share Destinations are terribly documented.

Essentially they're a way to "trigger" external apps once a render/export is complete - for example, this shows how Xsend Motion is triggered:

Final Cut Pro sends Apple Events to the third party application - and there's back and forth communication between Final Cut Pro and the third party app.

Thankfully, I happened to have a very old Apple sample project saved in my backups that demonstrates how this works - which has since sadly disappeared from the Internet.

I've uploaded the Objective-C example here.

I've also started attempting to port this example to a more modern Swift - but it's early days. You can track my progress here.

You can learn more about Custom Share Destinations on FCP Cafe here.

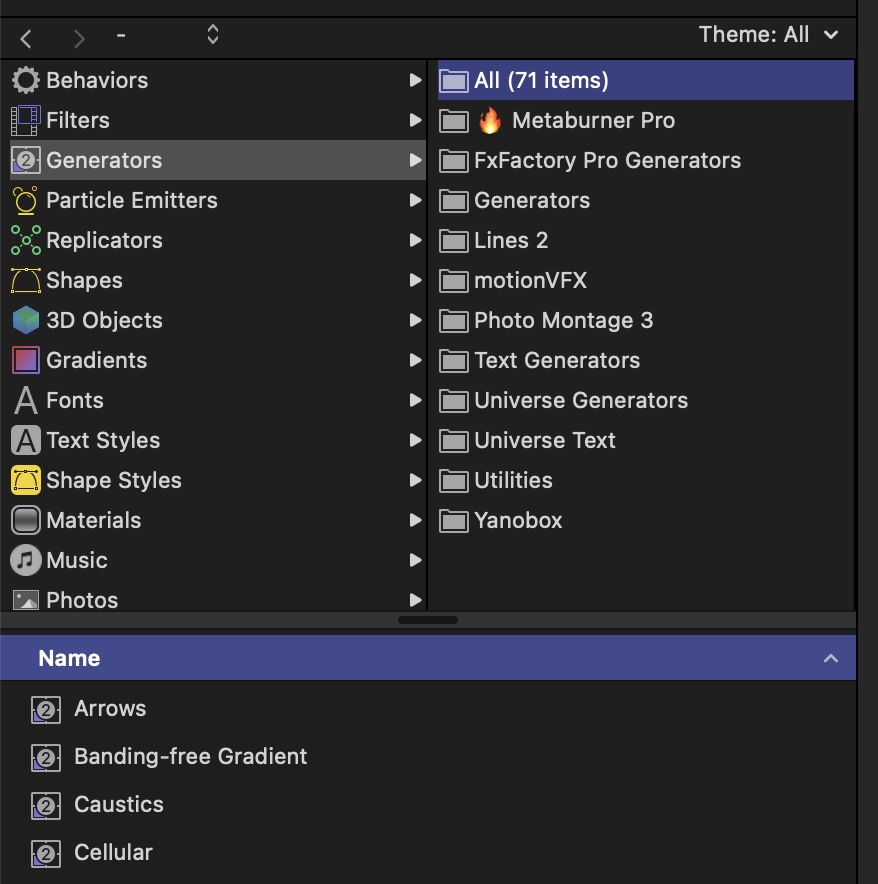

And last, but not least, we have FxPlug.

FxPlug is a powerful plugin architecture designed by Apple, specifically tailored for Final Cut Pro and Motion.

At its core, FxPlug enables third-party developers to create advanced effects, such as custom filters, transitions, and generators, expanding the capabilities of Final Cut Pro beyond its native features.

One of the key advantages of FxPlug is its support for high-bit-depth rendering, ensuring that the effects and transitions maintain the highest image quality without degradation. This is particularly important for professionals who require precision and high fidelity in their video projects. Additionally, FxPlug offers real-time performance, a crucial aspect for efficient workflow, allowing editors and motion graphic designers to preview effects without the need for time-consuming rendering.

Another significant aspect of FxPlug is its compatibility with Apple's Metal framework. This integration leverages the full power of modern Mac hardware, delivering accelerated performance and smoother playback, even with complex effects and high-resolution footage. The use of Metal also means that FxPlug plugins can harness the capabilities of the latest graphic processors, further enhancing the efficiency and speed of video editing tasks.

It provides a wide range of customizable parameters for each plugin, giving users complete control over the look and feel of the effects. This level of customization allows for greater creative freedom, enabling editors to achieve unique visual styles tailored to their specific project needs.

FxPlug is a robust and versatile plugin architecture that significantly enhances the capabilities of Final Cut Pro and Motion. Its support for high-bit-depth rendering, real-time performance, integration with Apple's Metal, and customizable options make it an essential tool for video editing professionals seeking to create high-quality, creative, and visually stunning projects.

Essentially though... FxPlug is basically just Filters & Generators for Apple Motion:

You can't directly use an FxPlug in Final Cut Pro - you need to make an Apple Motion template that uses it.

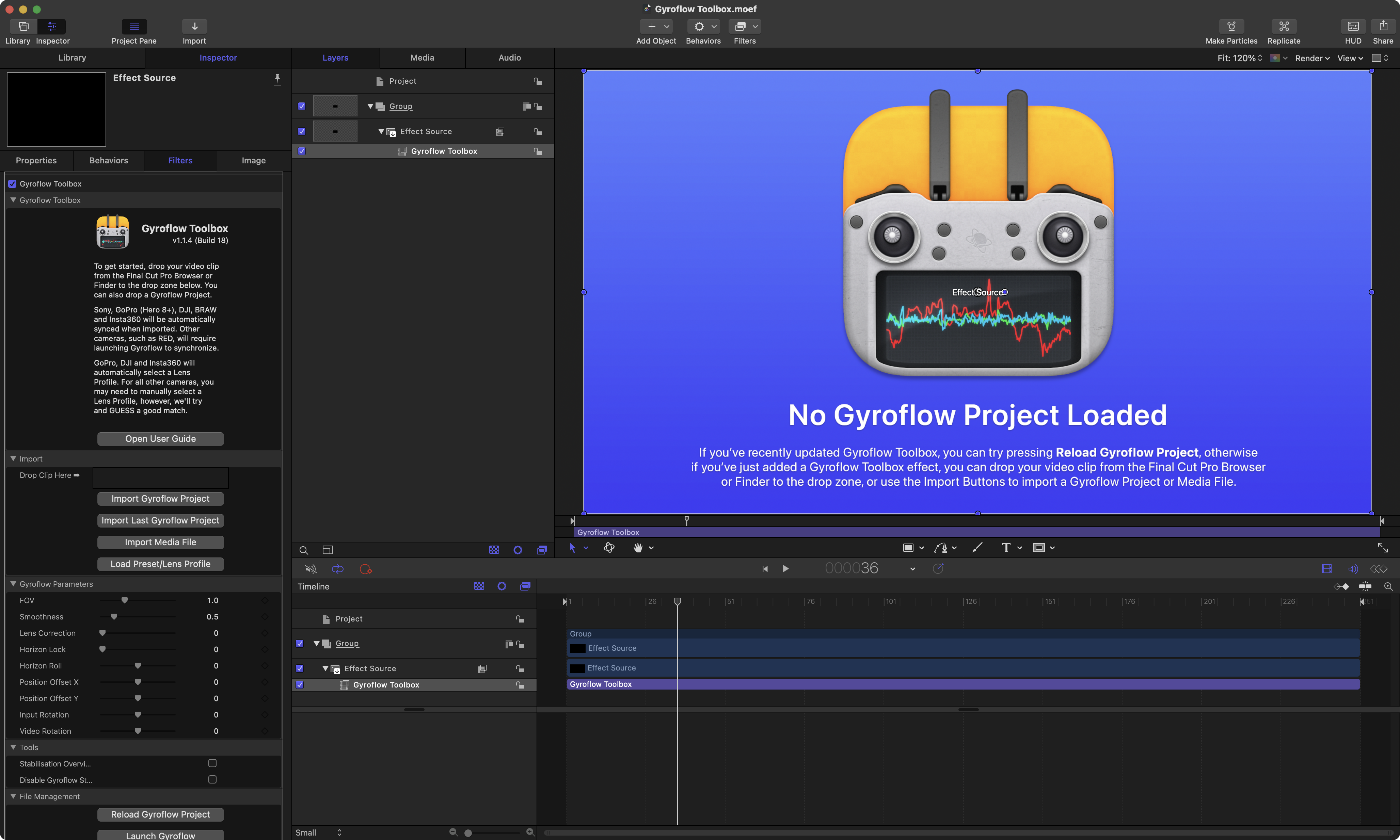

BRAW Toolbox and Gyroflow Toolbox both use FxPlug under the hood.

Here's a look at the BRAW Toolbox Motion Template:

Here's a look at the Gyroflow Toolbox Motion Template:

Gyroflow Toolbox is open source, so you can view the source code on GitHub here.

FxPlug is fairly flexible in that you can add your own custom parameters, and basically do whatever you want to the image.

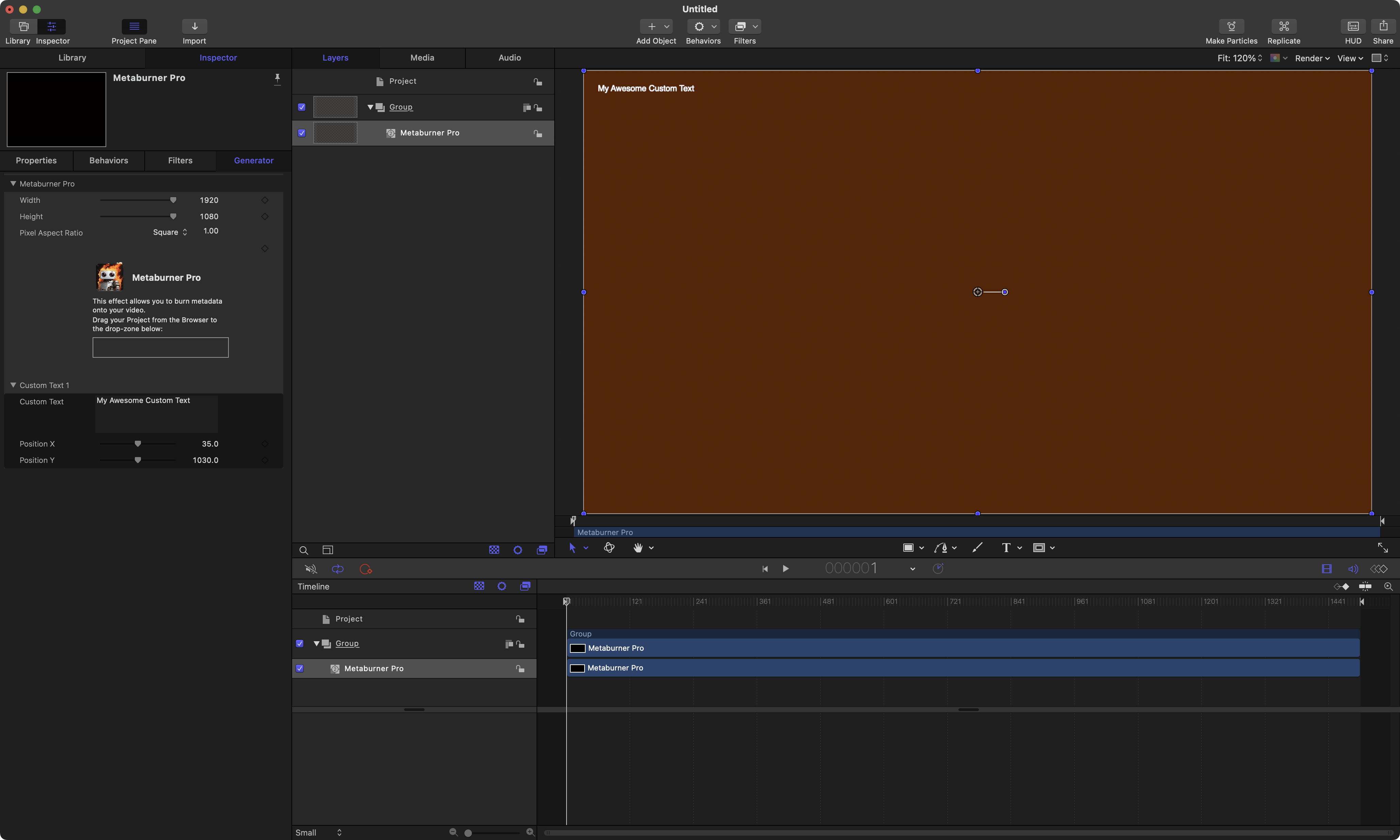

I'm currently building a new app called Metaburner Pro which is using FxPlug in Swift:

You can learn more about FxPlug over at FCP Cafe here.

You can find a list of third party Motion Templates (some of which use FxPlug) here.

Ok, so you have Workflow Extensions, Custom Share Destinations, FCPXML and FxPlug.

There are third party frameworks for dealing with FCPXML too...

Pipeline Neo is a Swift framework for working with FCPXML files easily.

Pipeline Neo is based on Pipeline, which was originally developed by Reuel Kim and is no longer maintained. Pipeline Neo was created as a spin-off project to fix and update the library when necessary.

You can learn more here. I've personally never really used it.

There's also DAWFileKit - a Swift library for reading and writing common import/export file formats between popular DAW and video editing applications with the ability to convert between formats.

DAWFileKit is made by Steffan Andrews, who is an absolute genius.

I've never personally used DAWFileKit, but I intend to play with it soon.

You can learn more here.

You also have Audio Units - which are basically system wide audio plugins that work in Pro Tools, Adobe Audition, Logic Pro, etc. You can use Audio Units on both Mac and iPad/iOS, which is interesting.

I've never personally played too much with Audio Units, as it's a GIANT rabbit hole, as you can see from the documentation.

Finally going back to Alex's video...

I totally think that Transcriber and BeatMark Pro could easily be ported to a Workflow Extension and improved to make it simpler and easier for Final Cut Pro editors.

In terms of bringing FCPX AutoDuck to a Workflow Extension, that's definitely possible too, but as Alex explains, you'd basically need to recreate Final Cut Pro's entire audio pipeline to mimic what Final Cut Pro does in FCPX AutoDuck.

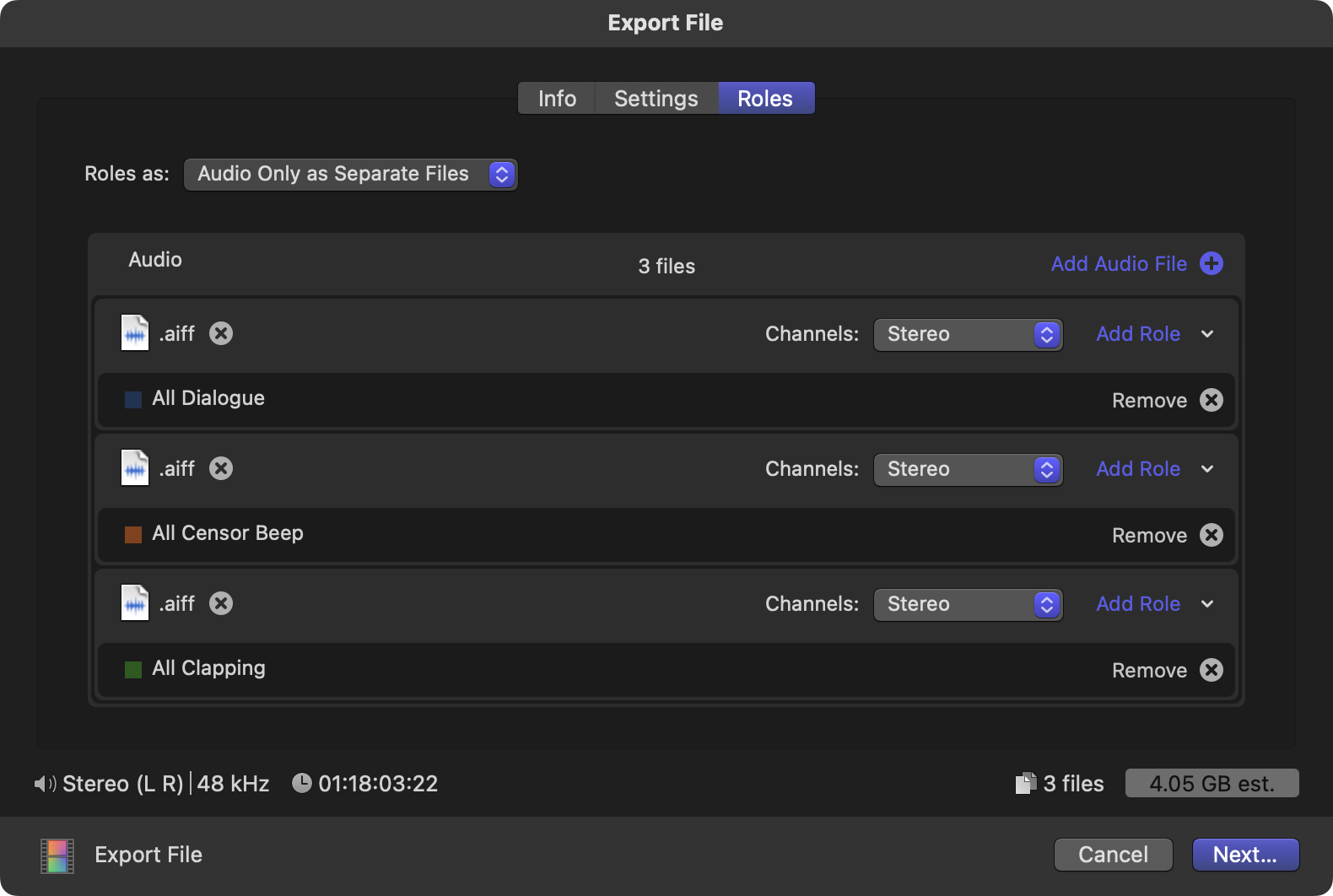

One potential workaround could be to create a Custom Share Destination, allowing you to export AIFFs of dialogue, music and effects separately, and then the Workflow Extension uses these mix downs for the processing, and then applies the audio adjustments to a Compound Clip of all the audio clips in that role.

Because you can create a Custom Share Destination that can export roles as separate audio files, the user would just need to export to a single Share Destination, then at the end of the export/render, you could programmatically send these files to the Workflow Extension for processing. Once processing is complete, the user could drag a new project from the Workflow Extension to their Final Cut Pro Browser, which would have Compound Clips of the individual audio roles, and levels/effects applied to those compound clips.

It's slightly complex - because you need to communicate between the Custom Share Destination and the Workflow Extension, but certainly achievable.
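The processing step in that workaround could look something like the sketch below. It's written in Python purely for illustration, and it assumes the dialogue mixdown has already been analysed into a list of (start, end) speech segments in seconds. The function name, the default fade length and the -12 dB duck level are all assumptions, not how FCPX AutoDuck actually works. It returns gain keyframes for the music that start fading down before each block of dialogue, which is only possible because it works on files rather than in real time.

```python
def ducking_keyframes(speech, fade=0.5, duck_db=-12.0, min_gap=1.0):
    """Return (time_seconds, gain_db) keyframes for a music track.

    speech: list of (start, end) tuples in seconds where dialogue is present.
    fade: seconds taken to fade the music down or back up.
    duck_db: gain applied to the music while dialogue is playing.
    min_gap: minimum time the music should sit at full level between dialogue.
    """
    # Merge dialogue segments whose gaps are too short to fade up and down again.
    merged = []
    for start, end in sorted(speech):
        if merged and start - merged[-1][1] < min_gap + 2 * fade:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))

    keyframes = []
    for start, end in merged:
        keyframes.append((max(0.0, start - fade), 0.0))  # start fading down before speech
        keyframes.append((start, duck_db))               # fully ducked as speech begins
        keyframes.append((end, duck_db))                 # hold until speech ends
        keyframes.append((end + fade, 0.0))              # fade back up afterwards
    return keyframes


segments = [(10.0, 14.0), (14.5, 20.0), (30.0, 35.0)]
for time, gain in ducking_keyframes(segments):
    print(f"{time:6.2f}s  {gain:+.1f} dB")
```

Merging nearby segments first stops the music from pumping up and down between short pauses in an interview. The hard part Alex describes, mapping these times back onto real timeline clips, is deliberately left out.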

But I totally agree with Alex in that CMTime is really hard and FCPXML can get insanely confusing.

For example, this is how we get the "current frame number" in Gyroflow Toolbox and BRAW Toolbox:

```objc
//---------------------------------------------------------
// Load the timing API:
//---------------------------------------------------------
id<FxTimingAPI_v4> timingAPI = [_apiManager apiForProtocol:@protocol(FxTimingAPI_v4)];
if (timingAPI == nil) {
    //---------------------------------------------------------
    // Show error message:
    //---------------------------------------------------------
    NSString *errorMessage = @"Unable to retrieve FxTimingAPI_v4 in pluginStateAtTime.";
    NSLog(@"[Gyroflow Toolbox Renderer] %@", errorMessage);
    if (error != NULL) {
        *error = [NSError errorWithDomain:FxPlugErrorDomain
                                     code:kFxError_FailedToLoadTimingAPI
                                 userInfo:@{
                                     NSLocalizedDescriptionKey : errorMessage }];
    }
    return NO;
}

//---------------------------------------------------------
// Load the Parameter Retrieval API:
//---------------------------------------------------------
id<FxParameterRetrievalAPI_v6> paramGetAPI = [_apiManager apiForProtocol:@protocol(FxParameterRetrievalAPI_v6)];
if (paramGetAPI == nil) {
    //---------------------------------------------------------
    // Show error message:
    //---------------------------------------------------------
    NSString *errorMessage = @"Unable to retrieve FxParameterRetrievalAPI_v6 in pluginStateAtTime.";
    NSLog(@"[Gyroflow Toolbox Renderer] %@", errorMessage);
    if (error != NULL) {
        *error = [NSError errorWithDomain:FxPlugErrorDomain
                                     code:kFxError_FailedToLoadParameterGetAPI
                                 userInfo:@{
                                     NSLocalizedDescriptionKey : errorMessage }];
    }
    return NO;
}

//---------------------------------------------------------
// Create a new Parameters "holder":
//---------------------------------------------------------
GyroflowParameters *params = [[[GyroflowParameters alloc] init] autorelease];

//---------------------------------------------------------
// Get the frame to render:
//---------------------------------------------------------
CMTime timelineFrameDuration = kCMTimeZero;
timelineFrameDuration = CMTimeMake( [timingAPI timelineFpsDenominatorForEffect:self],
                                    (int)[timingAPI timelineFpsNumeratorForEffect:self] );

CMTime timelineTime = kCMTimeZero;
[timingAPI timelineTime:&timelineTime fromInputTime:renderTime];

CMTime startTimeOfInputToFilter = kCMTimeZero;
[timingAPI startTimeForEffect:&startTimeOfInputToFilter];

CMTime startTimeOfInputToFilterInTimelineTime = kCMTimeZero;
[timingAPI timelineTime:&startTimeOfInputToFilterInTimelineTime fromInputTime:startTimeOfInputToFilter];

Float64 timelineTimeMinusStartTimeOfInputToFilterNumerator = (Float64)timelineTime.value * (Float64)startTimeOfInputToFilterInTimelineTime.timescale - (Float64)startTimeOfInputToFilterInTimelineTime.value * (Float64)timelineTime.timescale;
Float64 timelineTimeMinusStartTimeOfInputToFilterDenominator = (Float64)timelineTime.timescale * (Float64)startTimeOfInputToFilterInTimelineTime.timescale;

Float64 frame = ( ((Float64)timelineTimeMinusStartTimeOfInputToFilterNumerator / (Float64)timelineTimeMinusStartTimeOfInputToFilterDenominator) / ((Float64)timelineFrameDuration.value / (Float64)timelineFrameDuration.timescale) );

//---------------------------------------------------------
// Calculate the Timestamp:
//---------------------------------------------------------
Float64 timelineFpsNumerator = [timingAPI timelineFpsNumeratorForEffect:self];
Float64 timelineFpsDenominator = [timingAPI timelineFpsDenominatorForEffect:self];
Float64 frameRate = timelineFpsNumerator / timelineFpsDenominator;
Float64 timestamp = (frame / frameRate) * 1000000.0;
params.timestamp = [[[NSNumber alloc] initWithFloat:timestamp] autorelease];
```

HUGE props to Dr Gregory Clarke for all the INSANE work he does with FCPXML - again, another absolute genius. It's a LOT of work - just look at how many updates XtoCC and SendToX have had over the years!
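Stripped of the API calls, the frame calculation in the Objective-C excerpt above is rational arithmetic: subtract the clip's start time from the timeline time, then divide by the duration of one frame. Here is a minimal Python sketch of the same arithmetic using exact fractions. The function name and the example values are hypothetical, and it isn't part of Gyroflow Toolbox or BRAW Toolbox.

```python
from fractions import Fraction


def frame_and_timestamp(timeline_time, clip_start, fps_numerator, fps_denominator):
    """Return (frame number, timestamp in microseconds) relative to the clip start.

    timeline_time and clip_start are exact times in seconds (value / timescale),
    mirroring how CMTime stores an integer value and an integer timescale.
    """
    # One frame lasts denominator / numerator seconds, e.g. 1001/30000 for 29.97 fps.
    frame_duration = Fraction(fps_denominator, fps_numerator)
    frame = (timeline_time - clip_start) / frame_duration

    # Convert the frame number back into microseconds from the clip start.
    frame_rate = Fraction(fps_numerator, fps_denominator)
    timestamp_us = frame / frame_rate * 1_000_000
    return frame, timestamp_us


# Hypothetical example: a 29.97 fps timeline, with the clip starting 10 frames in
# and the playhead 25 frames in.
clip_start = Fraction(10 * 1001, 30000)
timeline_time = Fraction(25 * 1001, 30000)
frame, timestamp_us = frame_and_timestamp(timeline_time, clip_start, 30000, 1001)
print(frame, timestamp_us)  # prints: 15 500500
```

Working in fractions makes it easy to see why the original code cross-multiplies values and timescales: the two times can have different timescales, so they need a common denominator before they can be subtracted.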

Hope this extended "rant" is useful! Any questions, please leave them in the Discussion below. Thanks team!